Indoor Nano-Drones: Visual Inertial Odometry on an ultralight ARM module

Compulab’s UCM-i.MX93 System-on-Module mounted onto the manufacturer evaluation board (Photograph taken by Sarika Ramroop)

Virtana is developing a nano-drone system that can navigate indoors autonomously (e.g. in office buildings and warehouses), using the Crazyflie 2.1. This drone belongs to the nano-drone category [5]: under 50g, with a 10cm motor-to-motor diameter. For such size-constrained drones, carrying out Visual Inertial Odometry (VIO) has historically been considered infeasible [4]. However, using the UCM-i.MX93 System-on-Module (SoM), we have been able to run an open-source VIO system on EuRoC datasets at a 9.4Hz state estimation update rate, and our results indicate that the 4Hz rate we are targeting should need under 50% CPU use. This brings us one step closer to achieving something that is notoriously difficult: a VIO-powered navigation stack running on an autonomous nano-drone.

Achieving this type of autonomy generally requires good localization performance to work in tandem with path planning and flight control. While many outdoor drone solutions use GPS for localization, it is not feasible for indoor localization since performance is significantly degraded due to high signal attenuation [3].

The Crazyflie's small size also means that its payload is very limited: only 15g. Together, these two factors make autonomous indoor navigation especially challenging for this type of drone, for a number of reasons:

Limited battery size - Only low-powered devices can be used.

Size constraints for any mounted components - components must be smaller than the drone. This immediately rules out many sensors and processor board options from consideration.

Lightweight components - Due to the limited payload, any additional components/boards added to the drone must be very lightweight (<10g). This further narrows down sensor options. The processor also cannot require a heat sink beyond the lightest and smallest options.

Processor constraints - processors must be low-powered, small and lightweight to have minimal impact on flight time. This means more powerful options that are used with larger drones (e.g. NVidia’s Jetson line) cannot be used with drones of this size.

LiDAR-based approaches are used for localization on more standard-sized indoor drones. However, for nano-drones, the power requirements are too high and even the smallest LiDAR sensors still exceed the payload limit [4]. As such, LiDAR cannot be used.

In addition to the physical constraints, the processor constraint also limits the algorithmic approaches that can be used for localization. Scaramuzza and Zhang [8] claim that Visual Inertial Odometry (VIO) or Visual (Inertial) Simultaneous Localization and Mapping (vSLAM) are the best alternatives to GPS and LiDAR for accurate state estimates. However, these vision-based approaches are challenging to run on miniaturized low-powered processors, as they are both memory intensive and computationally expensive. As such, research into alternative methods is being carried out. Examples include the time-of-flight (ToF) based NanoSLAM by Niculescu et al [4] and a UWB-based approach by Pourjabar et al [6].

While we are taking a localization-based approach to autonomy, alternative solutions can potentially be used. We continue to be impressed by a DNN-based solution by Palossi et al [5], which provided some inspiration for the work being done here. They utilize a single camera with a DNN-based approach for collision avoidance and navigation, rather than implementing a more traditional localization-based approach. To do this, they use the highly parallelised, ultra-low-powered GAP8 processor from Greenwaves, with a Crazyflie 2.0 drone.

However, because of continued improvements in power consumption and processing power in miniaturized compute devices [2], we believe it is worth exploring VIO/vSLAM on more powerful modern processors. After considering a number of processor options, we settled on working towards mounting Compulab’s UCM-iMX93 System-on-Module (SoM) on the Crazyflie 2.1 for carrying out VIO/vSLAM. Full details can be found in our paper “Selecting a modern lightweight compute platform for carrying out VIO and vSLAM on a nano-drone”, which was submitted to the University of the West Indies Five Islands AI Conference (2024).

Analysis & Key Results

The Crazyflie has a maximum payload of 15g, while the UCM-iMX93 has a mass of 7g. The camera module and IMU are expected to be under 3g combined, so we expect that these components can be physically mounted to the drone.
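
The mass budget above can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted in this post; the 3g value is an upper bound on an expected mass rather than a measurement, and the helper name `payload_margin` is purely illustrative. Mounting hardware and cabling are not accounted for.

```python
# Payload budget for the Crazyflie 2.1, using the figures quoted in this post.
MAX_PAYLOAD_G = 15.0     # maximum payload of the drone
SOM_MASS_G = 7.0         # UCM-iMX93 SoM
CAMERA_IMU_MAX_G = 3.0   # expected upper bound for camera module + IMU combined


def payload_margin(max_payload_g: float, *component_masses_g: float) -> float:
    """Return the payload left over after mounting the given components."""
    return max_payload_g - sum(component_masses_g)


margin_g = payload_margin(MAX_PAYLOAD_G, SOM_MASS_G, CAMERA_IMU_MAX_G)
print(f"Worst-case remaining payload: {margin_g:.1f} g")
```

Under these assumptions, at least 5g of payload remains for mounts, cabling and anything else that has to fly.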

After selecting the processor, the next step is validating whether it is actually suitable for our application. If not, we need to either select a new processor, revisit our localization approach, or adjust our problem constraints based on our use case.

This evaluation process can be broken into two components:

Processing capability

Power consumption

Thus far, we have completed the processing capability evaluation. Using open-source EuRoC datasets [1], we were able to run the open-source Kimera-VIO library [7] on the UCM-i.MX93 evaluation kit (EVK). Running Kimera-VIO on the EVK required us to remove its OpenCV viz module dependency, and then cross-compile it and all of its dependencies before installation.

Kimera-VIO was selected for testing due to its modular design, which allows us to more easily make changes if they are required later on. It is also actively maintained and supports both monocular and stereo VIO.

After several testing iterations with various VIO frontend and backend configurations, we found an “optimal” configuration that balances processor load and accuracy. Using the EuRoC V1_01 and V2_01 datasets, we achieved an average state update rate of 9.4Hz. This required 93.4% CPU use and 69.3% memory use.

The Crazyflie is quite a small drone and we will be running it at low speeds (<0.5m/s linear velocity). Hence, we believe a 4Hz update rate, similar to what was achieved by Niculescu et al [4], should be sufficient for carrying out autonomous navigation. Targeting 4Hz would allow us to reduce our CPU use for VIO to under 50%. We believe this should leave more than enough headroom for our remaining processes to run, namely:

Sensor data capture

Path planning

Sending control commands to the Crazyflie
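
One rough way to sanity-check the 50% figure is to scale the measured load down to the target rate. The sketch below assumes, for illustration only, that CPU load scales linearly with the state update rate; this ignores fixed per-frame costs, so it should be read as an estimate and not as a measurement. The function name `scaled_cpu_percent` is hypothetical.

```python
def scaled_cpu_percent(measured_cpu: float, measured_hz: float, target_hz: float) -> float:
    """Estimate CPU use at target_hz, assuming load scales linearly with update rate."""
    if measured_hz <= 0:
        raise ValueError("measured_hz must be positive")
    return measured_cpu * target_hz / measured_hz


# Measured: 93.4% CPU at a 9.4Hz state update rate. Target: 4Hz.
estimate = scaled_cpu_percent(93.4, 9.4, 4.0)
print(f"Estimated CPU use at 4Hz: {estimate:.1f}%")
```

Under this linear assumption the estimate comes out at roughly 40%, which is consistent with the under-50% figure quoted above.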

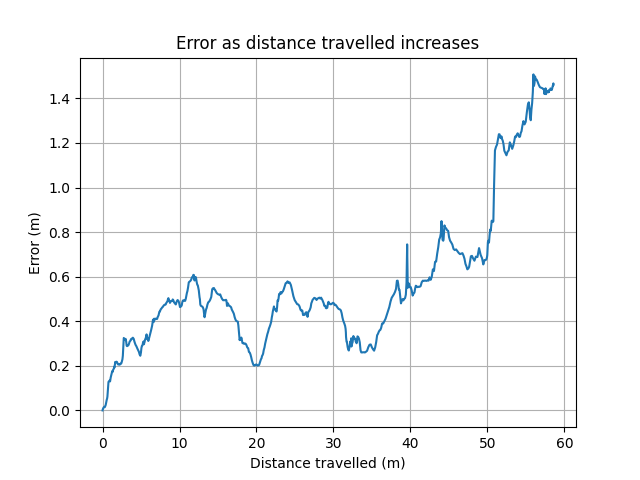

It should be noted that this monocular VIO configuration results in an error of up to approximately +/-0.5m in each axis over a travel distance of more than 50m. While this is not ideal, we still believe it is good enough to allow the Crazyflie to navigate our office space autonomously (few obstacles, fairly high ceiling). Running in the stereo configuration reduces the error to +/-0.1m in each axis, but CPU use exceeds 80% for a 4.7Hz state update rate and memory use is in excess of 90%, so this is unfortunately not a feasible option.

Overall though, this is an extremely promising first step towards achieving VIO on such a size-constrained drone. So what comes next?

Validating that Kimera-VIO (monocular) can give us equal or better performance with our selected sensors (OV9282 camera sensor, BMI088 IMU)

Evaluating the power consumption of our processor and its effect on battery life and flight time

Comparing Kimera-VIO performance against other suitable libraries

In the interest of speed and understanding our processor’s capabilities, not much time has been spent understanding if there are better comparable options

You can expect follow-up posts as we continue our evaluation. The remainder of this blog post provides a more in-depth dive into our testing process and results.

Overview of the Testing Process

On running Kimera-VIO with the frontend and backend configuration provided on the master branch of the repository, the processor performance can be summarized as follows (based on 5 runs with EuRoC V1_01 dataset):

4Hz maximum VIO state update rate

95% CPU use

93% memory use

This was not good enough as the processor would not have enough CPU cycles to carry out its other operations. We would expect the state update rate to drop below our required 4Hz value.

Limiting the queue size in our test script reduced the memory use to around 73%. Rebuilding the Kimera-VIO and GTSAM libraries with additional optimization flags did little to improve CPU performance.

VIO configuration changes

The VIO pipeline consists of two main components:

Frontend - responsible for feature detection. Feature tracks are passed to the backend to carry out optimization

Backend - carries out pose estimation using the feature tracks from the front end.

There are a number of configuration options for both the frontend and backend that affect both the speed of operations and the accuracy of pose estimates. We believed it should be possible to tune these to find a balance between speed and accuracy that meets our requirements.

The following frontend and backend parameters were modified with the expectation of improving CPU performance.

Frontend

feature_detector_type: Feature detection is usually computationally and memory intensive. Kimera-VIO supports several options provided by OpenCV, such as GFTT, ORB and FAST.

maxFeaturesPerFrame: Features from the frontend go to the backend and are used for optimization. This variable is the maximum number that can be sent to the backend. We would expect reducing this number to reduce accuracy but speed up time for backend processing.

maxFeatureAge: Maximum age of landmarks/feature tracks, measured in frames. Decreasing this reduces the number of frames that each feature needs to be compared against, but can also reduce accuracy.

Backend

linearizationMode: Makes use of GTSAM, which has several options that are supported by Kimera-VIO. The backend takes the longest time to run so changing the linearization method might see an improvement in performance.

horizon: Defines how much past data is considered for the current state estimate, in seconds. Less data will be less computationally intensive, but also decrease accuracy.

Tuning for each of these parameters was carried out individually to determine a combination that might give us more optimal performance. All of our code for carrying out testing and processing output logs can be found here [provide link]. Each test was carried out using the EuRoC V1_01, V2_01 and V1_02 datasets, but only V1_01 and V2_01 were used for analysis since they were taken at movement speeds closer to what we expect.
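
The one-parameter-at-a-time tuning described above can be sketched as follows. This is not our actual test script: the string labels are illustrative and are not Kimera-VIO's YAML encoding, and the baseline is assumed to be the first value listed for each parameter in the results later in this post. The helper `one_at_a_time` is a hypothetical name.

```python
# Candidate values are the ones reported in this post. The baseline is an
# assumption (first value listed per parameter), and the string labels are
# illustrative rather than Kimera-VIO's real configuration values.
BASELINE = {
    "feature_detector_type": "GFTT",
    "maxFeaturesPerFrame": 300,
    "maxFeatureAge": 25,
    "linearizationMode": "Hessian",
    "horizon": 6,
}
CANDIDATES = {
    "feature_detector_type": ["GFTT", "FAST", "ORB"],
    "maxFeaturesPerFrame": [300, 200, 100],
    "maxFeatureAge": [25, 20, 15],
    "linearizationMode": ["Hessian", "Implicit Schur", "Jacobian_Q", "Jacobian_SVD"],
    "horizon": [6, 5],
}


def one_at_a_time(baseline, candidates):
    """Yield (name, value, config) with exactly one parameter changed from baseline."""
    for name, values in candidates.items():
        for value in values:
            if value == baseline[name]:
                continue  # the baseline itself is run once, separately
            config = dict(baseline)
            config[name] = value
            yield name, value, config


runs = list(one_at_a_time(BASELINE, CANDIDATES))
for name, value, _ in runs:
    print(f"{name} = {value}")
```

Varying one parameter at a time keeps the number of runs small (10 here, plus the baseline) compared with a full grid over all combinations, at the cost of not capturing interactions between parameters.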

Detailed Results and Takeaways

Frontend and backend configuration changes

A large volume of data was generated as a result of these tests. This article only includes a summary of the most relevant information. For each set of results:

Graphs are shown only for the V1_01_easy dataset, since this is generally representative of the other datasets

Graphs all show the Euclidean pose error between the state estimate and ground truth with increase in the absolute distance traveled.

The left-most image represents the error using the default settings of Kimera-VIO.
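
For readers who want to reproduce this kind of graph, the metric can be sketched as below. The sketch covers the translational part of the pose only, assumes the estimated and ground-truth positions are already time-aligned and expressed in the same frame, and measures distance traveled along the ground-truth path. These are simplifying assumptions for illustration and the function name is hypothetical.

```python
import math


def error_vs_distance(estimated, ground_truth):
    """Return (distance_travelled, position_error) pairs for time-aligned 3D positions."""
    if len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be time-aligned and equal in length")
    travelled = 0.0
    points = []
    for i, (est, gt) in enumerate(zip(estimated, ground_truth)):
        if i > 0:
            # Accumulate path length along the ground-truth trajectory.
            travelled += math.dist(ground_truth[i - 1], gt)
        # Euclidean distance between the estimate and ground truth at this sample.
        points.append((travelled, math.dist(est, gt)))
    return points


# Tiny made-up trajectory, used purely to exercise the function.
ground_truth = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 2.0, 0.0)]
estimated = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.5), (1.3, 2.4, 0.0)]
curve = error_vs_distance(estimated, ground_truth)
for distance, error in curve:
    print(f"travelled {distance:.2f} m, error {error:.2f} m")
```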

Feature Detector Type

For the V1_01_easy dataset, the following graphs show the amount of error that is accumulated with increasing distance moved by the drone. FAST is the fastest and has the least error for this dataset; ORB performs the worst.

GFTT (CPU use: 95.5% ; state estimation rate: 4.18Hz)

FAST (CPU use: 89.5% ; state estimation rate: 4.29Hz)

ORB (CPU use: 95.5% ; state estimation rate: 4.18Hz)

The results for the V2_01_easy dataset are very similar to the above, with GFTT doing worse for CPU use (194%) and the ORB drift performance being the best (< 0.8m error); FAST has slightly worse drift than ORB.

Overall, FAST seems like the feature detector that we should be using.

maxFeaturesPerFrame

300 (CPU use: 95.5% ; state estimation rate: 4.12Hz)

200 (CPU use: 95.5% ; state estimation rate: 5.37Hz)

100 (CPU use: 94.5% ; state estimation rate: 7.78Hz)

The accuracy trends are the same for V2_01_easy, with error becoming worse as the max number of features decreases, but with comparable CPU use. However, the state estimate rate increases as the value decreases. This is likely due to the queue now being processed faster on the backend, which is where the speed up is observed (average time per frame at 200 maximum features is 180ms, compared to 240ms at 300).
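
The link between backend frame time and update rate can be made concrete with a quick calculation. The sketch below assumes the backend handles one frame at a time, so the state update rate cannot exceed the reciprocal of the average time per frame. This is a simplification for illustration, not a description of Kimera-VIO's scheduling.

```python
def max_rate_hz(avg_frame_time_ms: float) -> float:
    """Upper bound on update rate if frames are processed back to back."""
    return 1000.0 / avg_frame_time_ms


# (maxFeaturesPerFrame, average backend time per frame in ms) from the text above.
for max_features, frame_ms in [(300, 240.0), (200, 180.0)]:
    print(f"{max_features} features: at most {max_rate_hz(frame_ms):.2f} Hz")
```

The resulting bounds (about 4.2Hz and 5.6Hz) sit slightly above the state estimation rates of 4.12Hz and 5.37Hz listed for these two settings.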

maxFeatureAge

25 (CPU use: 96% ; state estimation rate: 4.14Hz)

20 (CPU use: 96% ; state estimation rate: 4.20Hz)

15 (CPU use: 94% ; state estimation rate: 4.24Hz)

Decreasing the maxFeatureAge value gives a small reduction in CPU utilization with a negligible difference in accuracy for all datasets considered.

Linearization Mode

Hessian (CPU use: 95.5% ; state estimation rate: 4.13Hz)

Implicit Schur (CPU use: 95.5% ; state estimation rate: 4.12Hz)

Jacobian_Q (CPU use: 95.5% ; state estimation rate: 4.12Hz)

Jacobian_SVD (CPU use: 95.5% ; state estimation rate: 4.10Hz)

For all linearization approaches, the CPU use and state estimation rate are about the same, but the Implicit Schur gives the least error overall for both V1_01_easy and V2_01_easy.

Horizon

At 6 seconds, the maximum Euclidean distance error for V1_01_easy is 1m, but at 5 seconds the maximum error is 25m. Reducing the horizon below 6 seconds introduces more error than can be tolerated.

Optimised Configuration

From the results in the previous section, we believed the following configuration would give us improved processor performance and good/comparable localisation estimates:

Feature detector type: FAST - best accuracy

Max age down to 15 - small speed up while still giving reasonable results

Max features reduced to 100 - significant speed up, and the other configuration changes will likely offset the reduction in accuracy.

Implicit Schur - gives less error than other linearisation options, with comparable CPU performance

With this VIO configuration, we were able to obtain a 9.4Hz VIO state update rate with a CPU use of 93.5% and memory use of 69.3%. As mentioned previously, our target update rate is 4Hz, and at this rate the CPU use for monocular VIO should be less than 50%. A breakdown of the VIO components is given below; this run also gave us our fastest backend frame times:

| Timer | # | Log Hz | avg +- std | [min, max] |
| --- | --- | --- | --- | --- |
| Mono Data Provider [ms] | 0 | | | |
| VioBackend [ms] | 715 | 9.40173 | 102.116 +- 31.9222 | [1, 286] |
| VioFrontend Frame Rate [ms] | 2145 | 28.5101 | 18.9385 +- 4.48318 | [11, 47] |
| VioFrontend Keyframe Rate [ms] | 715 | 9.49646 | 34.5566 +- 8.22637 | [18, 106] |
| VioFrontend [ms] | 2861 | 37.9408 | 22.9755 +- 13.9132 | [11, 106] |
| backend_input_queue Size [#] | 716 | 9.48970 | 6.70810 +- 1.90297 | [1, 18] |
| data_provider_left_frame_queue Size [#] | 2862 | 38.0969 | 4.98532 +- 0.00000 | [1, 5] |
| frontend_input_queue Size [#] | 2861 | 38.0149 | 4.99511 +- 0.00000 | [1, 5] |
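
Each entry in the raw timing output has the form `name # Hz {avg +- std} [min,max]`. As a minimal sketch of how such lines could be post-processed, the parser below extracts the fields with a regular expression and converts the backend's average time per frame into an equivalent rate. The regular expression and the function name `parse_stat` are written for this post's sample lines and are not part of Kimera-VIO.

```python
import re

# Matches lines such as:
#   VioBackend [ms] 715 9.40173 {102.116 +- 31.9222} [1,286]
LINE_RE = re.compile(
    r"^(?P<name>.+?)\s+(?P<count>\d+)\s+(?P<hz>\d+(?:\.\d+)?)\s+"
    r"\{(?P<avg>\d+(?:\.\d+)?) \+- (?P<std>\d+(?:\.\d+)?)\}\s+"
    r"\[(?P<min>\d+),(?P<max>\d+)\]$"
)


def parse_stat(line):
    """Parse one timing line into a dict, or return None if it has no statistics."""
    match = LINE_RE.match(line.strip())
    if match is None:
        return None
    fields = match.groupdict()
    return {
        "name": fields["name"],
        "count": int(fields["count"]),
        "hz": float(fields["hz"]),
        "avg": float(fields["avg"]),
        "std": float(fields["std"]),
        "min": int(fields["min"]),
        "max": int(fields["max"]),
    }


backend = parse_stat("VioBackend [ms] 715 9.40173 {102.116 +- 31.9222} [1,286]")
implied_rate_hz = 1000.0 / backend["avg"]
print(f"{backend['name']}: logged {backend['hz']:.2f} Hz, "
      f"implied by average frame time {implied_rate_hz:.2f} Hz")
```

The backend's average of about 102ms per frame corresponds to roughly 9.8Hz if frames were processed back to back, close to the logged 9.4Hz.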

The graphs below show the error in the state estimate. The graph on the left shows the Euclidean distance error, while the graph on the right shows the error in each axis.

The error in each axis is generally within +/-0.5m. Because our office has relatively few obstacles we believe this is sufficient for navigation and that this error can be accounted for.

Follow-up work

So far, Kimera-VIO is the only VIO/vSLAM system that has been tested on the UCM-iMX93 SoM. Because the overall goal of this task was to determine if it was possible to run such a system on the SoM, emphasis was not placed on finding the best algorithm. Additionally, because testing was only done with datasets, the results are not 100% representative of the final system (cameras, IMU, drone, operational velocity etc.) that we will be using.

As such, there are several follow up work streams that will need to be carried out in the future before we can determine if Kimera-VIO is the final localization solution for our drone. These are given below:

Testing Kimera-VIO with live data using time synchronized camera and IMU data from the final (or similar) sensor selection. The Kimera-VIO test script that was used was constructed for use with EuRoC. A custom script will need to be created to ingest this live data. Additionally, this work is blocked by IMU and camera hardware integration with the SoM.

Comparing Kimera-VIO with other VIO/vSLAM systems that might be better suited for our application and/or processor. The UCM-iMX93 has an AI/ML Neural Processing Unit that should be optimized for ML-based approaches, none of which have been considered thus far. There may also be other newer algorithms that are more performance optimized.

Analyzing power use of the SoM and the effect on flight time.

We also look forward to submitting upstream pull requests with changes to Kimera-VIO and dependent libraries to allow for easy cross-compilation for aarch64 embedded devices.

Conclusion

As part of evaluating whether our processor, the UCM-iMX93, can be used to carry out VIO/vSLAM on an extremely size-constrained drone, we need to assess:

whether it can actually run VIO/vSLAM

whether the power consumption is low enough that our drone is still able to achieve a reasonable flight time

Running the Kimera-VIO library with EuRoC datasets has given us good reason to believe we can run VIO/vSLAM on the UCM-iMX93. Based on our test results, a 4Hz state update rate should utilize less than 50% CPU, safely allowing for all other operations to take place. Next steps for this body of work include carrying out the aforementioned power analysis and making final decisions about the VIO system that we will be using for our autonomous flight task.

Acknowledgements

This work has been supported by a number of people at Virtana, without whom it would not have been possible. This includes Vijay Pradeep, who provided overall technical project guidance; Enrique Ramkissoon, who was integral to the process of selecting the UCM-i.MX93 SoM; and Andre Thomas and Nicholas Chamansingh, who were both essential in helping to shape the overall goals of the project and the project plan.

We thank the Caribbean Industrial Research Institute (CARIRI) for providing a grant for this project through their Shaping the Future of Innovation program. This was essential in making a lot of this work possible.

References

[1] Burri, M., Nikolic, J., Gohl, P., Schneider, T., Rehder, J., Omari, S., Achtelik, M. W., & Siegwart, R. (2016). The EuRoC micro aerial vehicle datasets. The International Journal of Robotics Research, 35(10), 1157–1163. https://doi.org/10.1177/0278364915620033

[2] Chen, Y., & Kung, S.Y. (2008). Trend and Challenge on System-on-a-Chip Designs. Journal of Signal Processing Systems, 53(1), 217–229. https://doi.org/10.1007/s11265-007-0129-7

[3] Kim Geok, T., Zar Aung, K., Sandar Aung, M., Thu Soe, M., Abdaziz, A., Pao Liew, C., Hossain, F., Tso, C., & Yong, W. (2021). Review of Indoor Positioning: Radio Wave Technology. Applied Sciences, 11(1), 279. https://doi.org/10.3390/app11010279

[4] Niculescu, V., Polonelli, T., Magno, M., & Benini, L. (2024). NanoSLAM: Enabling Fully Onboard SLAM for Tiny Robots. IEEE Internet of Things Journal, 11(8), 13584–13607. https://doi.org/10.1109/JIOT.2023.3339254

[5] Palossi, D., Loquercio, A., Conti, F., Flamand, E., Scaramuzza, D., & Benini, L. (2019). A 64-mW DNN-Based Visual Navigation Engine for Autonomous Nano-Drones. IEEE Internet of Things Journal, 6(5), 8357–8371. https://doi.org/10.1109/JIOT.2019.2917066

[6] Pourjabar, M., AlKatheeri, A., Rusci, M., Barcis, A., Niculescu, V., Ferrante, E., Palossi, D., & Benini, L. (2023). Land & Localize: An Infrastructure-free and Scalable Nano-Drones Swarm with UWB-based Localization. IEEE Computer Society. https://doi.org/10.1109/DCOSS-IoT58021.2023.00104

[7] Rosinol, A., Abate, M., Chang, Y., & Carlone, L. (2020). Kimera: an Open-Source Library for Real-Time Metric-Semantic Localization and Mapping. IEEE Intl. Conf. on Robotics and Automation (ICRA). https://doi.org/10.48550/arXiv.1910.02490

[8] Scaramuzza, D., & Zhang, Z. (2019). Visual-Inertial Odometry of Aerial Robots. Springer Encyclopedia of Robotics, 1(1), 1-13. https://doi.org/10.48550/arXiv.1906.03289