PAST PROJECTS

REMOTE AUDIO MONITORING IOT DEVICE

Technologies Used and Focus Areas:

Python (PyAudio, PySerial, FastAPI, etc.)

Bash

Docker

React / JavaScript

Rust (Axum)

Raspberry Pi

Linux

Advanced Linux Sound Architecture (ALSA)

Tailscale

Project Staffing:

Primary Contributors: 2 Software Engineers (full-time)

1 Technical Program Manager (part-time)

Project Duration: 2 months

Client Location: San Francisco, USA

The client needed a single device that let a user remotely play selected audio through an attached speaker, record GPS data, and record any audio heard at the remote site. The user also needed to be able to upload audio files to the device remotely for playback. All of this had to be done securely through a simple interface, with the collected data bundled together.

Virtana designed and delivered an appliance that can receive user input and allow a user to carry out remote experimentation seamlessly.

Workstreams included:

Selecting appropriate hardware for the system

Creating the overall system design from user front-end to hardware interaction for data collection and feedback

Integrating hardware from a Docker container on the Raspberry Pi (microphone, speakers, GPS, I/O-controlled relay for power) and bundling GPS and audio recording output, as well as additional metadata and logs

Creating a simple React front-end for enabling users to start/stop the appliance, upload files, and receive status updates

Creating a Rust server backend for receiving input from the React front-end and routing data as needed

Creating a FastAPI Python interface to handle hardware-related commands

Creating all setup scripts and services for launch on device startup

Setting up Docker for inter-container communication and secure remote access via Tailscale

System integration and end-to-end testing, from setup to data collection
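As a rough illustration of the data-bundling workstream above, the sketch below packs the files from one recording session into a single archive together with a manifest. It is not the delivered implementation: the function name `bundle_session`, the `manifest.json` layout, and the choice of a `.tar.gz` archive are all assumptions made for this example.

```python
import io
import json
import tarfile
import time
from pathlib import Path


def bundle_session(session_dir: Path, out_path: Path, metadata: dict) -> list[str]:
    """Pack every file in session_dir plus a manifest.json into one .tar.gz.

    Hypothetical helper: the names and the manifest layout are assumptions.
    out_path should live outside session_dir.
    """
    files = sorted(p for p in session_dir.iterdir() if p.is_file())
    manifest = {
        "created_unix": int(time.time()),
        "metadata": metadata,  # e.g. device name, session notes
        "files": [{"name": p.name, "bytes": p.stat().st_size} for p in files],
    }
    payload = json.dumps(manifest, indent=2).encode("utf-8")

    with tarfile.open(out_path, "w:gz") as tar:
        # Recorded data first (audio, GPS track, logs, ...).
        for p in files:
            tar.add(p, arcname=p.name)
        # Then the manifest, written from memory rather than from disk.
        info = tarfile.TarInfo(name="manifest.json")
        info.size = len(payload)
        info.mtime = manifest["created_unix"]
        tar.addfile(info, io.BytesIO(payload))

    return [p.name for p in files] + ["manifest.json"]
```

A caller might invoke this once per session, for example `bundle_session(Path("session"), Path("bundle.tar.gz"), {"device": "demo"})`, and then offer the resulting archive for download.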

The Virtana team worked autonomously for all of these workstreams and provided updates regularly to client stakeholders via a dedicated Slack channel.

EXTRINSIC CALIBRATION OF RIGIDLY-ATTACHED CAMERAS WITH NON-OVERLAPPING FOVS

Technologies Used & Focus Areas

Python (OpenCV, ROS, JAXOpt) & Bash

Linux

Docker

Nonlinear optimization with JAXOpt

Camera projection, 3D geometry, & computer vision

Camera calibration

Project Staffing

Primary Contributors: 2 Software Engineers (full-time)

Technical Oversight: 1 Principal Engineer (part-time)

Project Duration: 3 months

Client Location: San Francisco, USA

Caption: A visualization tool showing the poses of 4x stereo cameras and 3x calibration boards, before and after a real-world extrinsics calibration.

Virtana proposed, designed and implemented a calibration station and purpose-built optimizer to calibrate the extrinsics for multiple stereo cameras with non-overlapping fields of view, thereby increasing the accuracy of a client's mobile robot’s VSLAM stack.

Workstreams included:

Designing, sourcing and fabricating custom calibration boards with less than 0.1% error from print shops in San Francisco

Writing Python scripts to sync 4x stereo cameras driven by a ROS stack and collect RGB image data for calibration. All data was provided by the client in the form of ROS bags, thereby eliminating the need to replicate any hardware setup on Virtana's end.

Validating snapshot suitability by checking for sufficient calibration target detection

Implementing a graph-based extrinsics initializer using solvePnP to obtain more accurate extrinsics inputs to the optimizer

Packaging all the input data into a JAXOpt problem that minimizes the reprojection error to ~1px using a Levenberg-Marquardt (LM) solver

Implementing a 2D & 3D visualizer to compare the poses and reprojected points before and after optimization

Setting up a GitLab CI/CD pipeline to auto-build and deliver updated Docker images to the end users on each merge into the main branch

Authoring a manual for the data collection and optimization process to improve the end user's ramp-up experience
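The quantity minimized by the optimizer in the workstreams above is the reprojection error. The sketch below shows, under simplifying assumptions, how that error can be computed for one camera and one board: an ideal pinhole model with no lens distortion, a pose given as a rotation matrix `R` and translation `t`, and function names that are hypothetical. It is plain Python for readability and is not the JAXOpt problem built for the project.

```python
import math


def transform(R, t, p):
    """Apply a rigid transform: R (3x3 nested lists) times p, plus t."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def project(point_cam, fx, fy, cx, cy):
    """Ideal pinhole projection of a camera-frame 3D point to pixel coordinates."""
    x, y, z = point_cam
    return (fx * x / z + cx, fy * y / z + cy)


def rms_reprojection_error(R, t, board_points, detections, intrinsics):
    """RMS pixel distance between detected corners and reprojected board points.

    R, t         : assumed board-to-camera pose
    board_points : 3D corner positions in the board frame
    detections   : matching (u, v) pixel detections
    intrinsics   : (fx, fy, cx, cy)
    """
    total = 0.0
    for p_board, (u_obs, v_obs) in zip(board_points, detections):
        u, v = project(transform(R, t, p_board), *intrinsics)
        total += (u - u_obs) ** 2 + (v - v_obs) ** 2
    return math.sqrt(total / len(board_points))
```

An optimizer such as Levenberg-Marquardt would adjust the pose parameters behind `R` and `t` (for every camera and board jointly) to drive this value down.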

The Virtana team maintained frequent communication with the client via a dedicated Slack channel and weekly virtual syncs.

ROBOT PERCEPTION STACK CO-DEVELOPMENT

Technologies Used & Focus Areas:

C++, Python, & Golang

Linux

Nonlinear optimization & SciPy

Robot kinematics, camera projection, 3D geometry, & computer vision

Camera & Robot Calibration

Project Staffing:

Primary Contributors: 4 Software Engineers (full-time)

Project Duration: 2 Years

Client Location: San Francisco, USA

Caption: This picture shows a very similar robot system, including a Universal Robots arm, conveyor belt, and additional sensors. Due to client confidentiality requirements, the actual image cannot be shown.

We provided support in developing & maintaining the entire perception stack for a pick-and-place factory automation solution. This included RGB camera & depth camera drivers, custom middleware integration, and multi-camera, multi-robot-arm system-level calibration. Through weekly syncs, impromptu design discussions, and systematic code reviews, Virtana coordinated tightly with the client’s technical lead, significantly accelerating their existing roadmap while also providing ongoing software maintenance support for the newly developed assets. This tight coordination allowed Virtana to deliver high-quality, high-impact software while accommodating rapid client-side changes in roadmap and requirements.

FACTORY WORKCELL CUSTOM SIMULATION

Technologies Used & Focus Areas:

Unity3d Game Engine

PyBullet

Linux & Windows

Google Cloud Platform

C#

Robot Kinematics

Project Staffing:

Primary Contributors: 3 Software Engineers (full-time)

Technical Oversight: 1 Principal Engineer (part-time)

Project Duration: 3 Years

Client Location: San Francisco, USA

Caption: Rendering of the developed simulation toolchain and framework, demonstrating a depalletization use-case for a logistics application.

A client required a custom simulator to test their factory automation offering, increase their customers’ confidence, and accelerate their own internal development. Virtana worked closely with the client engineering team & product managers to develop a simulator solution in the Unity3d game engine that was tightly integrated with the existing software codebase. Virtana delivered an initial implementation within a matter of months and then continued to work closely with the client to meet their evolving and growing needs. This included simulating 2D cameras, 3D depth sensors, robot arms, pneumatic suction cups, finger grippers, conveyor belts, and other production line peripherals, for a variety of different workcell scenarios & configurations. Virtana also managed cloud deployments of the simulator and associated software infrastructure, enabling client engineers to connect on-demand with minimal effort.

CUSTOM SIMULATOR FOR OUTDOOR GROUND ROBOTS

Technologies Used & Focus Areas:

Python

Linux

ROS1 (Robot Operating System)

NumPy, vectorization, and Python performance optimization

Project Staffing:

Primary Contributors: 2 Software Engineers (full-time)

Technical Oversight: 1 Principal Software Engineer (part-time)

Project Duration: 10 months

Client Location: San Francisco, USA

Caption: Clearpath Husky UGV traversing uneven terrain similar to what was simulated by the Virtana team. Due to client confidentiality requirements, the actual client vehicle could not be shown.

A client needed a lightweight simulator that modeled how outdoor vehicles traversed uneven & rough terrain. The Virtana team drove the design and development of this simulator, while working closely with the client team through weekly update meetings, design reviews, and code reviews. The final system met all client needs and was tightly integrated into the client’s production-grade codebase & systems.

DRONE DATA COLLECTION AND SENSOR SELECTION

Technologies Used & Focus Areas:

Drone Field Operations & Piloting

Computer Vision

C++

Camera, sensor, & lidar driver development

Project Staffing:

Primary Contributors: 1 Software Engineer (full-time) & 1 Technical Project Manager (half-time)

Technical Oversight: 1 Principal Engineer (part-time)

Project Duration: 4 Months

Client Location: San Francisco, USA

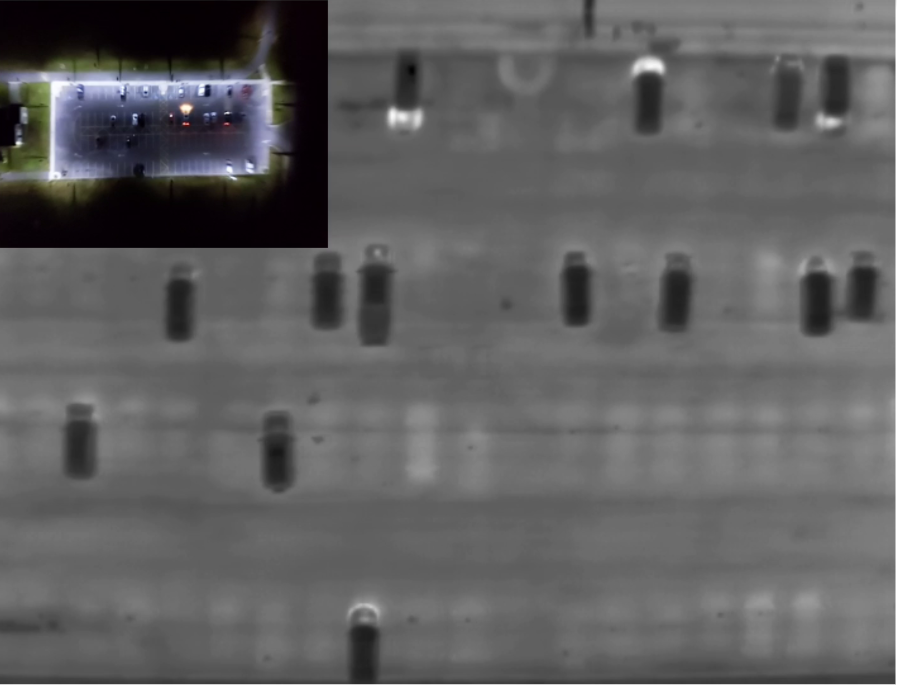

Caption: Downward-facing long wave infrared imagery, captured as part of the exploration and sensor selection process.

A drone company was developing a product for a new use case and required support in choosing which sensing modalities and sensor specs would be best suited to it. Virtana began with a broad industry review of the sensors & modalities that could meet the client’s needs, downselected the options to a few modalities and models, and field-tested these sensors by flying them in canonical situations that matched the client’s use case. This project required rapid iteration, which was facilitated by constant communication with the client to quickly adjust the requirements and approach based on each new set of experimental and analytical findings. Virtana’s hundreds of experimental datasets and additional analysis gave the client a much deeper understanding of, and intuition for, the tradeoffs between various sensors and specs. Virtana also proposed a sensor suite that met the client’s needs, with experimental data to support the proposal.

ROS SIMULATION & NAVIGATION STACK INTEGRATION

Technologies Used & Focus Areas:

C++

Linux

ROS1, Gazebo, & ROS Navigation Stack

2D & 3D Lidar sensor selection & software integration

Project Staffing:

Primary Contributors: 2 Software Engineers (full-time)

Technical Oversight: 1 Principal Software Engineer (part-time)

Project Duration: 5 Months

Client Location: San Francisco, USA

Caption: 3D lidar data generated from a similar sensor & system, which originally appeared in the Point Cloud Library (PCL) documentation. Due to client confidentiality requirements, Virtana’s experimental results could not be shown.

A client wanted to demonstrate the navigation & obstacle avoidance capabilities of their novel robot platform. As such, the Virtana team introduced ROS into their existing codebase, and integrated the ROS navigation stack into their software platform. Virtana also integrated the Gazebo robot simulation framework into their software platform, enabling efficient validation of the navigation capabilities. As the integration progressed, Virtana not only supported the original scope of work but also helped troubleshoot & fix issues across the parts of the client’s software stack that the navigation system depended on, including embedded microcontroller code, motor controller protocols, and various timing and communication issues.

CUSTOM IOT HARDWARE, EMBEDDED, & CLOUD DEVELOPMENT

Technologies Used & Focus Areas:

AWS - IoT Core, Amplify, DynamoDB, Cognito, AppSync, CloudWatch

FreeRTOS

Analog sensor integration

PCB Development

ESP32 Embedded Software Development

React Web Framework

Node.js

Project Staffing:

Primary Contributors: 3 Software Engineers (full-time)

Product Management: 1 Product Manager (part-time)

Project Duration: 2 years

Caption: ESP32 development board, used as part of the initial development of the device (from Soselectronic).

Virtana developed a custom, low-cost IoT device to meet the needs of a specific public-sector entity. Virtana drove the entire technical & product roadmap, and worked closely with non-technical end-users to ensure that each iteration of the system came closer to meeting their needs.

FEASIBILITY STUDY FOR 3D LIDAR SENSOR PLACEMENT

Technologies Used & Focus Areas:

Linux

Blender

Gazebo

ROS

Project Staffing:

Primary Contributors: 1 Software Engineer (full-time)

Technical Oversight: 1 Principal Software Engineer (part-time)

Project Duration: 1 Week (was part of a larger client engagement)

Client Location: San Francisco, USA

Caption: The Velodyne Puck VLP-16 is one of the 3D lidar sensors that was being analyzed during this sensor selection and placement effort.

A client wanted to integrate multiple 2D and 3D lidar sensors on their robot platform to provide adequate coverage in the proximity of their robot. Using the client’s CAD models as a starting point, Virtana ported the robot model into Gazebo and analyzed the resulting sensor coverage for various sensor placements and environments. This enabled the client to move forward with a design that required only a single 3D lidar sensor and a few much cheaper 2D lidar sensors to provide sufficient coverage in blind spots identified by Virtana’s analysis.
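To give a flavor of what a coverage question looks like, the toy sketch below estimates how much of a ring around the robot is seen by at least one planar lidar. It is a deliberately simplified stand-in, not the Gazebo-based analysis described above: it works in 2D, ignores occlusion by the robot body and the environment, and the `Lidar2D` class and function names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Lidar2D:
    x: float          # mounting position in the robot frame, metres
    y: float
    yaw: float        # boresight direction, radians
    fov: float        # total horizontal field of view, radians
    max_range: float  # metres


def sees(sensor: Lidar2D, px: float, py: float) -> bool:
    """True if the point lies within the sensor's range and angular sector."""
    dx, dy = px - sensor.x, py - sensor.y
    if math.hypot(dx, dy) > sensor.max_range:
        return False
    bearing = math.atan2(dy, dx) - sensor.yaw
    # Wrap the bearing into [-pi, pi] before comparing with the half-FOV.
    bearing = math.atan2(math.sin(bearing), math.cos(bearing))
    return abs(bearing) <= sensor.fov / 2


def ring_coverage(sensors, radius: float, samples: int = 360) -> float:
    """Fraction of sample points on a circle of the given radius seen by any sensor."""
    hits = 0
    for k in range(samples):
        a = 2 * math.pi * k / samples
        px, py = radius * math.cos(a), radius * math.sin(a)
        hits += any(sees(s, px, py) for s in sensors)
    return hits / samples
```

Comparing `ring_coverage` for different candidate mounting positions gives a quick first look at blind spots before moving to a full simulation with real geometry.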

FEASIBILITY STUDY AND DE-RISKING OF NVIDIA’S FLEX PARTICLE ENGINE

Technologies Used:

C++

Linux & Windows

NVIDIA Flex SDK

Unity3d Game Engine

Project Staffing:

Primary Contributors: 1 Software Engineer (half-time)

Technical Oversight: 1 Principal Software Engineer (part-time)

Project Duration: 1 Month

Client Location: San Francisco, USA

Caption: Example scene that is included with the NVIDIA Flex particle engine. Due to client confidentiality requirements, the actual experiments could not be shown here.

A client was evaluating various particle-based simulators and wanted a more informed technical opinion as to whether NVIDIA’s Flex particle engine would meet their requirements. The Virtana team developed multiple proofs of concept on top of NVIDIA Flex to validate whether or not it would meet the client’s needs. Virtana delivered a final report containing all of our findings, as well as our experimental & proof-of-concept code.